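Our research team's program, “Development of Intelligent Ultrasound Monitoring and BBBO-Based Drug Delivery Technology for Treating Intractable Geriatric Diseases, and Training of MD-PhD Brain Convergence Researchers,” carried out jointly with the research teams of Professors Hyun Jeong-ho (현정호) and Yu Jae-seok (유재석) at the Daegu Gyeongbuk Institute of Science and Technology (DGIST), has been selected for the DGIST–Regional Medical School Global Research and Education Collaboration Program.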


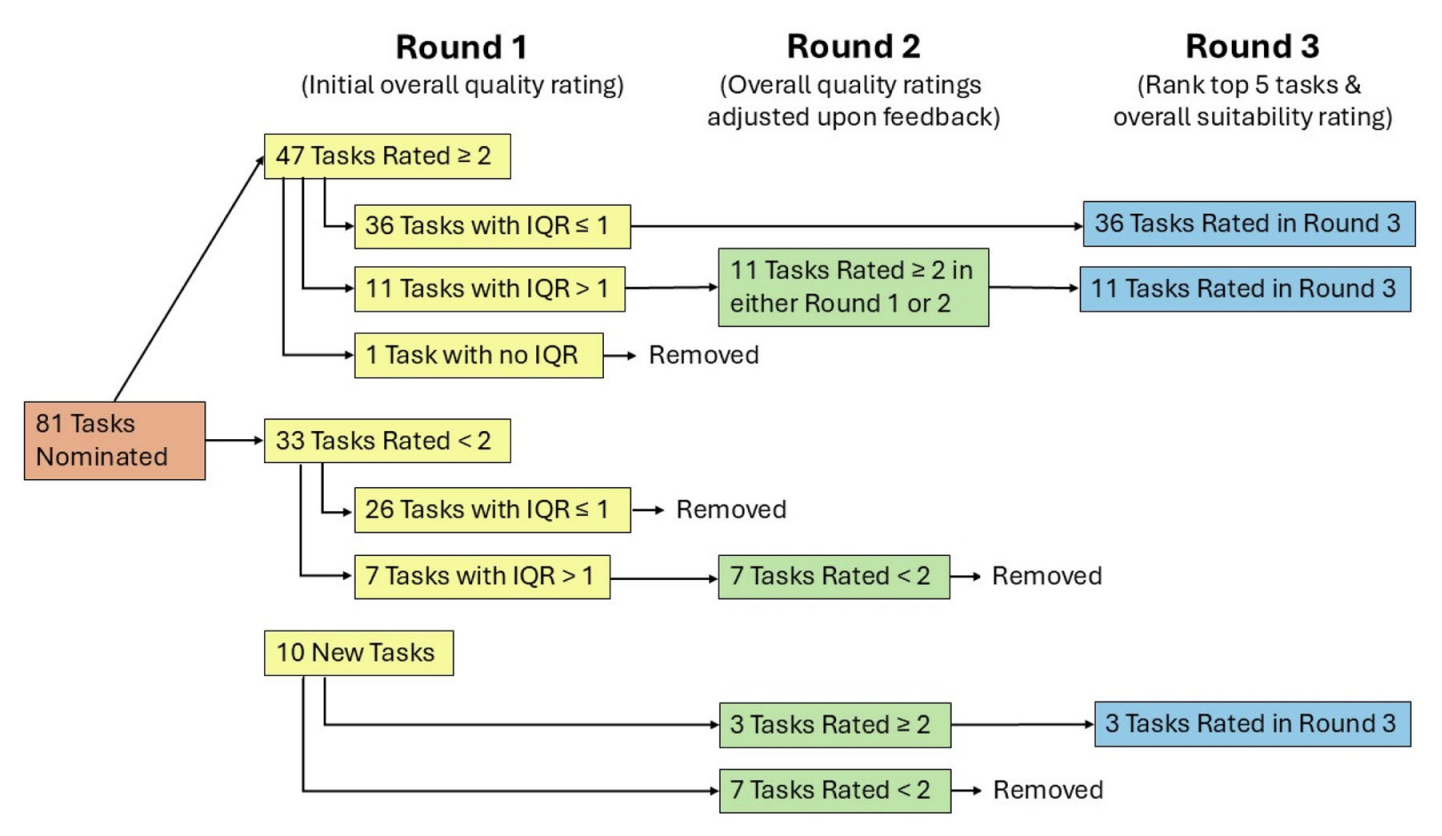

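Our research team participated in the SIRS Social Cognition Research Harmonization Group, and the resulting multinational panel consensus study on transdiagnostic and transcultural social cognition tasks has been published.

A paper from a study our team conducted jointly with the research teams of Professors Kyooseob Ha and Yong Min Ahn at Seoul National University Hospital has been published.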

Identifying clinical and proteomic markers for early diagnosis and prognosis prediction of major psychiatric disorders. Lee H, Han D, Rhee SJ, Lee J, Kim J, Lee Y, Kim EY, Park DY, Roh S, Baik M, Jung HY, Lee TY, Kim M, Kim H, Kim SH, Kwon JS, Ahn YM, Ha K. J Affect Disord. 2025 Jan 15;369:886-896. doi: 10.1016/j.jad.2024.10.054.

https://linkinghub.elsevier.com/retrieve/pii/S0165-0327(24)01746-4

A paper from the COH-FIT international collaborative study, in which our team participated, has been published.

Collaborative outcomes study on health and functioning during infection times (COH-FIT): Insights on modifiable and non-modifiable risk and protective factors for wellbeing and mental health during the COVID-19 pandemic from multivariable and network analyses. Solmi M, Thompson T, Cortese S, Estradé A, Agorastos A, Radua J, Dragioti E, Vancampfort D, Thygesen LC, Aschauer H, Schlögelhofer M, Aschauer E, Schneeberger A, Huber CG, Hasler G, Conus P, Cuénod KQD, von Känel R, Arrondo G, Fusar-Poli P, Gorwood P, Llorca PM, Krebs MO, Scanferla E, Kishimoto T, Rabbani G, Skonieczna-Żydecka K, Brambilla P, Favaro A, Takamiya A, Zoccante L, Colizzi M, Bourgin J, Kamiński K, Moghadasin M, Seedat S, Matthews E, Wells J, Vassilopoulou E, Gadelha A, Su KP, Kwon JS, Kim M, Lee TY, Papsuev O, Manková D, Boscutti A, Gerunda C, Saccon D, Righi E, Monaco F, Croatto G, Cereda G, Demurtas J, Brondino N, Veronese N, Enrico P, Politi P, Ciappolino V, Pfennig A, Bechdolf A, Meyer-Lindenberg A, Kahl KG, Domschke K, Bauer M, Koutsouleris N, Winter S, Borgwardt S, Bitter I, Balazs J, Czobor P, Unoka Z, Mavridis D, Tsamakis K, Bozikas VP, Tunvirachaisakul C, Maes M, Rungnirundorn T, Supasitthumrong T, Haque A, Brunoni AR, Costardi CG, Schuch FB, Polanczyk G, Luiz JM, Fonseca L, Aparicio LV, Valvassori SS, Nordentoft M, Vendsborg P, Hoffmann SH, Sehli J, Sartorius N, Heuss S, Guinart D, Hamilton J, Kane J, Rubio J, Sand M, Koyanagi A, Solanes A, Andreu-Bern… Eur Neuropsychopharmacol. 2025 Jan;90:1-15. doi: 10.1016/j.euroneuro.2024.07.010.

https://www.sciencedirect.com/science/article/pii/S0924977X2400186X?via%3Dihub

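Zsh scripts serve many purposes: running shell commands, processing several commands as a batch, customizing administrative tasks, automating work, and more. If you use macOS or Linux, it is therefore worth learning the basics of Zsh programming. This post covers the fundamental concepts of the Zsh scripting language and illustrates several commonly used features with simple examples.

Running the following command directly in a terminal prints the message “Hello World”.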

$ echo "Hello World"

Now let's write a simple Zsh script in a file and run it. Create a file named first.sh with an editor such as nano, enter the following content, and save it. You can also edit and save it with vi in the terminal.

```zsh
#!/bin/zsh

echo "Hello World!"
```

There are two ways to run a script: pass the file to the zsh shell, or give the file execute permission and run it directly. Both are shown below.

$ zsh first.sh

Hello World!

(Alternatively, first give the script execute permission and then run it.)

$ chmod a+x first.sh

$ ./first.sh

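The echo command accepts several options, and the example below shows a few common ones. Without options, echo prints its text followed by a newline. The -n option suppresses that trailing newline. The -e option interprets escape sequences that start with a backslash (\), such as \n (newline) and \t (tab). Zsh's echo already interprets these escapes by default unless the BSD_ECHO option is set, so in zsh -e mainly makes the intent explicit; in bash, -e is required.

For example, write the following in a new script file named echo_example.sh.

```zsh
#!/bin/zsh

echo "Printing text with newline"

echo -n "Printing text without newline"

echo -e "\nRemoving \t backslash \t characters\n"
```

Running the script shows the effect of each option. The second echo does not add a newline after its text. However, the string passed to the third echo starts with \n, so the third line of output still begins on a new line.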

$ zsh echo_example.sh

Printing text with newline

Printing text without newline

Removing backslash characters

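The # symbol starts a single-line comment in a script. Everything after # on a line is ignored when the shell runs the script. Comments are useful for leaving explanations or notes in a script.

For example, create a file named comment_example.sh with the content below. The script adds two numbers and prints the result, with a comment before each step.

```zsh
#!/bin/zsh

# Add two numeric values
((sum=25+35))

# Print the result
echo $sum
```

Running this script prints the sum, 60.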

$ zsh comment_example.sh

60

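Zsh has no dedicated syntax for commenting out several lines at once, but there are workarounds. One is to combine the : (colon) command with quotes. The : command does nothing; it simply succeeds and ignores its arguments. Quoted multi-line text placed after it is therefore never executed, and the whole block behaves like a comment. Use single quotes, because inside double quotes any $ expansion or command substitution in the text would still be evaluated.

For example, write the following in multiline-comment.sh. The colon-and-quotes trick holds a multi-line description, and the script computes and prints the square of 5.

```zsh
#!/bin/zsh

: '
The following script calculates
the square value of the number, 5.
'

((area=5*5))
echo $area
```

Running the script prints 25, the result of 5*5.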

$ zsh multiline-comment.sh

25

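Zsh's while loop repeatedly runs a set of commands as long as a given condition is true. In the while_example.sh script below, a while loop prints numbers five times. The script sets count to 1 and increments it by 1 on each pass. When count equals 5, break exits the loop. The condition [ $valid ] is always true because valid holds a non-empty string, so the loop actually ends through break.

```zsh
#!/bin/zsh

valid=true
count=1
while [ $valid ]
do
  echo $count
  if [ $count -eq 5 ]; then
    break
  fi
  ((count++))
done
```

Running the script prints the numbers 1 through 5, one per line.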

$ zsh while_example.sh

Zsh's for loop repeats work over a fixed list or range of values, and C-style for syntax is also available. In the for_example.sh script below, a C-style for statement counts the counter variable down from 10 to 1. Each pass prints counter followed by a space on the same line. After the loop, printf "\n" adds the final newline.

```zsh
#!/bin/zsh

for (( counter=10; counter>0; counter-- ))
do
  echo -n "$counter "
done
printf "\n"
```

Running the script prints the numbers from 10 down to 1 on a single line, separated by spaces: 10 9 8 ... 1.

$ zsh for_example.sh

The read command takes input from the user. For example, write the following in user_input.sh. When run, the script asks for the user's name and then prints a welcome message that includes it.

```zsh
#!/bin/zsh

echo "Enter Your Name"
read name
echo "Welcome $name!"
```

After you run the script and type a name, the terminal shows a welcome greeting containing that name.

$ zsh user_input.sh

An if statement runs commands depending on a condition. An if block starts with if and ends with fi. As a simple example, the simple_if.sh script below prints a different message depending on the value of n. If n is less than 10, it prints “It is a one digit number”; otherwise it prints “It is a two digit number”. Numbers are compared here with -lt (less than). Other comparison operators include -eq (equal), -ne (not equal), and -gt (greater than).

```zsh
#!/bin/zsh

n=10
if [ $n -lt 10 ]; then
  echo "It is a one digit number"
else
  echo "It is a two digit number"
fi
```

Here n=10, so the condition is false and the script prints It is a two digit number. If n were less than 10, the first message would be printed instead.

$ zsh simple_if.sh

To check whether two or more conditions all hold in an if statement, use the logical AND operator &&. The if_with_AND.sh script below asks for a username and a password. It prints “valid user” only if both match the given strings; otherwise it prints “invalid user”.

```zsh
#!/bin/zsh

echo "Enter username"
read username
echo "Enter password"
read password

if [[ ( $username == "admin" && $password == "secret" ) ]]; then
  echo "valid user"
else
  echo "invalid user"
fi
```

For example, entering admin as the username and secret as the password makes the script print valid user. Any other input prints invalid user.

$ zsh if_with_AND.sh

To treat a test as true when at least one of several conditions holds, use the logical OR operator ||. The if_with_OR.sh script below reads a number from the user. It prints “You won the game” if the number is 15 or 45, and “You lost the game” otherwise.

```zsh
#!/bin/zsh

echo "Enter any number"
read n

if [[ ( $n -eq 15 || $n -eq 45 ) ]]
then
  echo "You won the game"
else
  echo "You lost the game"
fi
```

For example, entering 15 or 45 shows the message You won the game, and entering any other number shows You lost the game.

$ zsh if_with_OR.sh

To test several conditions in turn within a single if block, add else-if branches. In Zsh scripts, the keyword for this is elif rather than else if. The elseif_example.sh script below reads a lucky number from the user. It prints a first-, second-, or third-prize message for particular values and a consolation message otherwise.

```zsh
#!/bin/zsh

echo "Enter your lucky number"
read n

if [ $n -eq 101 ]; then
  echo "You got 1st prize"
elif [ $n -eq 510 ]; then
  echo "You got 2nd prize"
elif [ $n -eq 999 ]; then
  echo "You got 3rd prize"
else
  echo "Sorry, try for the next time"
fi
```

For example, entering 101 prints the first-prize message (“You got 1st prize”). Entering 510 prints the second-prize message (“You got 2nd prize”), and 999 prints the third-prize message (“You got 3rd prize”). Any other number prints Sorry, try for the next time.

$ zsh elseif_example.sh

Another way to handle multiple conditions is the case statement. It checks which of several patterns a value matches and runs the corresponding branch. This makes it a handy replacement for long if-elif-else chains. A case block starts with case and is closed with esac (case spelled backwards).

The case_example.sh script below implements the same behavior as the previous example using case. Each branch ends with ;;, and the * pattern matches any value not caught by an earlier branch.

```zsh
#!/bin/zsh

echo "Enter your lucky number"
read n

case $n in
  101)
    echo "You got 1st prize" ;;
  510)
    echo "You got 2nd prize" ;;
  999)
    echo "You got 3rd prize" ;;
  *)
    echo "Sorry, try for the next time" ;;
esac
```

The script behaves exactly like the earlier if-elif example. Input 101 prints You got 1st prize, 510 prints You got 2nd prize, and 999 prints You got 3rd prize. Any other input prints Sorry, try for the next time.

$ zsh case_example.sh

When you pass arguments to a script on the command line, the script can use them through special variables. $1 holds the first argument, $2 the second, and $# the number of arguments passed.

As an example, let's write command_line.sh. This script prints the total number of arguments and the values of the first and second arguments.

```zsh
#!/bin/zsh

echo "Total arguments : $#"
echo "1st Argument = $1"
echo "2nd argument = $2"
```

For example, run the script with two arguments, as in $ zsh command_line.sh Hello World. It reports 2 arguments in total, with “Hello” as the first argument and “World” as the second.

$ zsh command_line.sh Hello World

Command-line arguments can also be passed in name=value form and parsed, as in the command_line_names.sh script below. The script loops over every argument in the $@ list and splits each one at the = sign into a name and a value. It assigns the value to x if the name is X and to y if the name is Y. Finally, it computes and prints the sum of the two values.

```zsh
#!/bin/zsh

for arg in "$@"
do
  # Split name=value at the "=" sign
  index=$(echo $arg | cut -f1 -d=)
  val=$(echo $arg | cut -f2 -d=)
  case $index in
    X) x=$val ;;
    Y) y=$val ;;
    *) ;;  # ignore any other names
  esac
done

((result=x+y))
echo "X+Y=$result"
```

For example, running the script as $ zsh command_line_names.sh X=45 Y=30 prints X+Y=75.

$ zsh command_line_names.sh X=45 Y=30

Several string variables can be combined into one string. Writing variables next to each other with no space joins them directly, and the += operator appends new text to an existing string. In the string_combine.sh example below, $string1$string2 prints the two values joined together. By contrast, in string3=$string1+$string2 the + is not an operator but an ordinary character, so it becomes part of the string. The string3+=... line then appends more text to that variable.

```zsh
#!/bin/zsh

string1="Linux"
string2="Hint"
echo "$string1$string2"

string3=$string1+$string2
string3+=" is a good tutorial blog site"
echo $string3
```

When you run the script, the first echo prints LinuxHint and the second echo prints Linux+Hint is a good tutorial blog site.

$ zsh string_combine.sh

Zsh has no dedicated function for cutting out part of a string, but parameter expansion can extract a substring. The ${variable:offset:length} form extracts length characters starting at offset, where offsets are counted from 0. The substring_example.sh script below takes 5 characters of Str starting at offset 6 (the seventh character) and prints them.

```zsh
#!/bin/zsh

Str="Learn Linux from LinuxHint"
subStr=${Str:6:5}
echo $subStr
```

Running the script prints Linux.

$ zsh substring_example.sh

There are several ways to do arithmetic in Zsh, and one of them is the double-parentheses (( )) syntax. The add_numbers.sh script below reads two integers from the user, computes their sum, and prints the result.

```zsh
#!/bin/zsh

echo "Enter first number"
read x
echo "Enter second number"
read y

(( sum=x+y ))
echo "The result of addition=$sum"
```

For example, running the script and entering 5 and 7 produces the output The result of addition=12.

$ zsh add_numbers.sh

In a Zsh script, grouping a task into a function makes it easy to reuse whenever needed. A function is declared as function name() or simply name(), with the commands it runs written inside braces { }. Once defined, the function is called by writing just its name, without parentheses.

For example, let's define and call a simple function in function_example.sh.

```zsh
#!/bin/zsh

function F1() {
  echo 'I like Zsh programming'
}

F1
```

Running the script executes F1, which prints I like Zsh programming.

$ zsh function_example.sh

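Unlike functions in many other programming languages, Zsh functions do not declare parameters in their definition. Values passed in a call are still available inside the function through variables such as $1 and $2. The first argument of the call becomes $1 inside the function, the second becomes $2, and so on.

For example, function_parameter.sh below defines a function that computes the area of a rectangle and calls it with two arguments.

```zsh
#!/bin/zsh

Rectangle_Area() {
  area=$(($1 * $2))
  echo "Area is : $area"
}

Rectangle_Area 10 20
```

Running the script calls Rectangle_Area 10 20, which prints Area is : 200.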

$ zsh function_parameter.sh

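Zsh functions generally do not return strings directly. Instead, the caller can capture whatever a function prints with echo into a variable through command substitution ($(...)). The return command can pass back only an integer exit status (0 to 255, checked through $?), so strings are retrieved by capturing output in this way.

The function_return.sh script below asks the user for a name. The greeting function builds a welcome string from the global variable name and prints it, and the caller captures that output into a variable.

```zsh
#!/bin/zsh

function greeting() {
  str="Hello, $name"
  echo $str
}

echo "Enter your name"
read name
val=$(greeting)

echo "Return value of the function is $val"
```

For example, suppose you enter Alice. The function produces Hello, Alice, but because it runs inside $(...), that text is captured into val instead of appearing on screen. After the prompt, the script therefore prints a single line: Return value of the function is Hello, Alice.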

$ zsh function_return.sh

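The mkdir command creates a new directory. The make_directory.sh script below asks for a directory name and creates a directory with that name using mkdir. If a directory with the same name already exists, mkdir prints an error message.

```zsh
#!/bin/zsh

echo "Enter directory name"
read newdir
# Call mkdir directly; wrapping it in backquotes would try to run its (empty) output as a command
mkdir "$newdir"
```

Running the script and entering a directory name creates a new directory with that name in the current path.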

$ zsh make_directory.sh

To check whether a directory with the same name already exists before creating it, use the -d test in a condition. The -d test is true if the given path exists and is a directory. The directory_exist.sh script below checks whether a directory with the entered name exists in the current location. If it does, the script reports it; if not, it creates the directory with mkdir.

```zsh
#!/bin/zsh

echo "Enter directory name"
read ndir
if [ -d "$ndir" ]
then
  echo "Directory exist"
else
  mkdir "$ndir"
  echo "Directory created"
fi
```

If a folder with that name already exists, the script prints Directory exist. Otherwise it creates the folder and prints Directory created.

$ zsh directory_exist.sh

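Combining the read command with a loop lets a script read and process a text file line by line. The read_file.sh script below reads book.txt in the current directory one line at a time and prints each line to the screen.

```zsh
#!/bin/zsh

file='book.txt'
# IFS= keeps leading/trailing whitespace; -r keeps backslashes as-is
while IFS= read -r line; do
  # print -r -- prints the line verbatim, without interpreting escapes
  print -r -- "$line"
done < "$file"
```

Running the script prints the contents of book.txt line by line.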

$ zsh read_file.sh

The rm command deletes files, and with the -i option it asks for confirmation before deleting. The delete_file.sh script below takes the name of a file from the user and asks for confirmation before removing it.

```zsh
#!/bin/zsh

echo "Enter filename to remove"
read fn
rm -i "$fn"
```

After you run the script and enter a file name, a prompt asks whether to delete that file. Answering y deletes it.

$ zsh delete_file.sh

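The >> redirection operator appends new data to the end of an existing file; a single > would overwrite the file instead. The append_file.sh script below appends one line of text to book.txt and prints the file's contents before and after the append.

```zsh
#!/bin/zsh

echo "Before appending the file"
cat book.txt

echo "Learning Laravel 5" >> book.txt

echo "After appending the file"
cat book.txt
```

The script first shows the file's contents before the append. It then adds the new line and shows the updated contents.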

$ zsh append_file.sh

The -e test checks whether a file or directory exists, while -f checks whether the name refers to a regular file. The file_exist.sh script below uses -f to check whether the file name passed as a command-line argument exists in the current directory, then prints the result.

```zsh
#!/bin/zsh

filename=$1
if [ -f "$filename" ]; then
  echo "File exists"
else
  echo "File does not exist"
fi
```

For example, with book.txt present in the current directory, running $ zsh file_exist.sh book.txt prints File exists. Running it with the name of a file that does not exist, such as book2.txt, prints File does not exist.

$ zsh file_exist.sh book.txt

$ zsh file_exist.sh book2.txt

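On Linux, tools such as mail or sendmail let a script send email. To use them, the corresponding package must be installed and a mail server must be configured. The mail_example.sh script below is a simple example that sends an email with a subject and a message to a preset recipient.

```zsh
#!/bin/zsh

Recipient="admin@example.com"
Subject="Greeting"
Message="Welcome to our site"
# <<< (a here-string) feeds $Message to mail as the message body
mail -s "$Subject" "$Recipient" <<< "$Message"
```

If a mail environment is set up, running the script sends admin@example.com an email with the subject “Greeting” and the body “Welcome to our site”.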

$ zsh mail_example.sh

The date command returns the system's current date and time and supports many format specifiers. For example:

- %Y: four-digit year
- %m: two-digit month
- %d: two-digit day
- %H: hour (24-hour clock)
- %M: minute
- %S: second

The date_parse.sh script below uses these specifiers to print the current date in day-month-year form and the time in hour:minute:second form.

```zsh
#!/bin/zsh

Year=$(date +%Y)
Month=$(date +%m)
Day=$(date +%d)
Hour=$(date +%H)
Minute=$(date +%M)
Second=$(date +%S)

date
echo "Current Date is: $Day-$Month-$Year"
echo "Current Time is: $Hour:$Minute:$Second"
```

The script first prints the current system date and time as a single string. It then shows the date as Current Date is: day-month-year and the time as Current Time is: hour:minute:second.

$ zsh date_parse.sh

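wait is a built-in command that pauses the script until background processes it started have finished. Given a process ID, as in wait [PID], it waits for that process to end. With no argument, it waits until all background jobs started by the current script have completed.

The wait_example.sh script below shows a simple use.

```zsh
#!/bin/zsh

echo "Wait command" &
process_id=$!
wait $process_id
echo "Exited with status $?"
```

The script runs echo "Wait command" in the background and stores its process ID, taken from $!, in process_id. wait then blocks until that process finishes and returns its exit status, which the script prints through $?.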

$ zsh wait_example.sh

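The sleep command pauses a script for a given amount of time, measured in seconds (s) by default. With the GNU coreutils version of sleep found on most Linux systems, you can also use the suffixes m (minutes), h (hours), and d (days). The sleep_example.sh script below waits for 5 seconds.

```zsh
#!/bin/zsh

echo "Wait for 5 seconds"
sleep 5
echo "Completed"
```

The script first prints Wait for 5 seconds, waits about 5 seconds, and then prints Completed.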

$ zsh sleep_example.sh

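That concludes our tour of 30 basic, commonly used Zsh scripting examples. Run these simple examples yourself to get a feel for how Zsh shell scripts work. With that foundation, you will be able to automate more complex tasks with scripts as needed.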


| Cognitive Domain | Test |
|---|---|
| Speed of processing | Brief Assessment of Cognition in Schizophrenia (BACS): Symbol-Coding; Category Fluency: Animal Naming; Trail Making Test: Part A |
| Attention/Vigilance | Continuous Performance Test–Identical Pairs (CPT-IP)* |
| Working memory (nonverbal) | Wechsler Memory Scale®–3rd Ed. (WMS®-III): Spatial Span |
| Working memory (verbal) | Letter–Number Span |
| Verbal learning | Hopkins Verbal Learning Test–Revised™ (HVLT-R™) |
| Visual learning | Brief Visuospatial Memory Test–Revised (BVMT-R™) |
| Reasoning and problem solving | Neuropsychological Assessment Battery® (NAB®): Mazes |
| Social cognition | Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT™): Managing Emotions |

The Brain Imaging Data Structure (BIDS) standard

In the previous section, we pointed out that Nipype can be used to create reproducible analysis pipelines that can be applied across different datasets. This is true, in principle, but in practice, it also relies on one more idea: that of a data standard. This is because to be truly transferable, an analysis pipeline needs to know where to find the data and metadata that it uses for analysis. Thus, in this section, we will shift our focus to talking about how entire neuroimaging projects are (or should be) laid out. Until recently, datasets were usually organized in an idiosyncratic way. Each researcher had to decide on their own what data organization made sense to them. This made data sharing and reuse quite difficult because if you wanted to use someone else’s data, there was a very good chance you’d first have to spend a few days just figuring out what you were looking at and how you could go about reading the data into whatever environment you were comfortable with.

The BIDS specification

Fortunately, things have improved dramatically in recent years. Recognizing that working with neuroimaging data would be considerably easier if everyone adopted a common data representation standard, a group of (mostly) fMRI researchers convened in 2016 to create something now known as the Brain Imaging Data Structure, or BIDS. BIDS wasn’t the first data standard proposed in fMRI, but it has become by far the most widely adopted. Much of the success of BIDS can be traced to its simplicity: the standard deliberately insists on human readability as well as machine readability. A machine can ingest a dataset and do all kinds of processing with it, and a human looking at the files can also make sense of the dataset, understanding what kinds of data were collected and what experiments were conducted. While there are some nuances and complexities to BIDS, the core of the specification consists of a relatively simple set of rules that a human with some domain knowledge can readily understand and implement.

We won’t spend much time describing the details of the BIDS specification in this book, as there’s already excellent documentation for that on the project’s website. Instead, we’ll just touch on a couple of core principles. The easiest way to understand what BIDS is about is to dive right into an example. Here’s a sample directory structure we’ve borrowed from the BIDS documentation. It shows a valid BIDS dataset that contains just a single subject:

```
project/
    sub-control01/
        anat/
            sub-control01_T1w.nii.gz
            sub-control01_T1w.json
            sub-control01_T2w.nii.gz
            sub-control01_T2w.json
        func/
            sub-control01_task-nback_bold.nii.gz
            sub-control01_task-nback_bold.json
            sub-control01_task-nback_events.tsv
            sub-control01_task-nback_physio.tsv.gz
            sub-control01_task-nback_physio.json
            sub-control01_task-nback_sbref.nii.gz
        dwi/
            sub-control01_dwi.nii.gz
            sub-control01_dwi.bval
            sub-control01_dwi.bvec
        fmap/
            sub-control01_phasediff.nii.gz
            sub-control01_phasediff.json
            sub-control01_magnitude1.nii.gz
        sub-control01_scans.tsv
    code/
        deface.py
    derivatives/
    README
    participants.tsv
    dataset_description.json
    CHANGES
```

There are two important points to note here. First, the BIDS specification restricts how files are organized within a BIDS project directory. For example, every subject’s data goes inside a sub-[id] folder below the project root. The sub- prefix is required, and [id] is a researcher-selected string that uniquely identifies that subject within the project ("control01" in the example). Similarly, inside each subject directory we find subdirectories containing data of different modalities: anat for anatomical images, func for functional images, dwi for diffusion-weighted images, and so on. When each subject has multiple data collection sessions, an extra level is added to the hierarchy. Functional data from the first session of subject control01 would then be stored in a folder like sub-control01/ses-01/func.

Second, valid BIDS files must follow particular naming conventions. The precise naming structure of each file depends on what kind of file it is, but a BIDS filename is always made up of three parts:

1. A sequence of key-value pairs, where each key is separated from its value by a dash and the pairs are separated by underscores.
2. A “suffix” that directly precedes the file extension and describes the type of data in the file. The suffix comes from a controlled vocabulary, so it can only be one of a few accepted values, such as "bold" or "dwi".
3. An extension that defines the file format.

For example, take a file like sub-control01/func/sub-control01_task-nback_bold.nii.gz and examine its constituent chunks. From the filename alone, we can infer that the file is a NIfTI image (.nii.gz extension) containing BOLD fMRI data (bold suffix) for task nback, acquired from subject control01.

Besides these conventions, there are several other key elements of the BIDS specification. We won’t discuss them in detail, but it’s good to at least be aware of them:

A dataset_description.json file at the root level that contains basic information about the project (e.g., the name of the dataset and a description of its constituents, as well as citation information).

BIDS Derivatives

The BIDS specification was originally created with static representations of neuroimaging datasets in mind. It quickly became clear, however, that the standard should also handle derivatives of datasets: new BIDS datasets generated by applying some transformation to one or more existing BIDS datasets. For example, suppose we have a BIDS dataset containing raw fMRI images. Before analysis, we’ll typically want to preprocess those images, for example to remove artifacts, apply motion correction, or temporally filter the signal. It’s great if our preprocessing pipeline can take BIDS datasets as inputs, but what should it then do with the output? A naive approach would be to construct a new BIDS dataset that’s very similar to the original one, with the original (raw) fMRI images replaced by new (preprocessed) ones. That is likely to cause confusion: a user could easily end up with many different versions of the same BIDS dataset and no formal way to determine how they relate to one another. To address this problem, the BIDS-Derivatives extension introduces additional metadata and file naming conventions. These make it easier to chain BIDS-aware tools (see the next section) without chaos taking hold.

The BIDS Ecosystem

At this point, you might be wondering: what is BIDS good for? Surely the point of introducing a new data standard isn’t just to inconvenience people by forcing them to organize their data a certain way? Spending precious time making datasets and workflows BIDS-compliant must bring some benefit to individual researchers (and, ideally, to the community as a whole), right? Well, yes, it does! The benefits of buying into the BIDS ecosystem are quite substantial. Let’s look at a few.

Easier data sharing and reuse

One obvious benefit we alluded to above is that sharing and re-using neuroimaging data becomes much easier once many people agree to organize their data the same way. As a trivial example, once you know that BIDS organizes data according to a fixed hierarchy (i.e., subject –> session –> run), it’s easy to understand other people’s datasets. There’s no chance of finding time-course images belonging to subject 1 in, say, /imaging/old/NbackTask/rawData/niftis/controlgroup/1/. But the benefits of BIDS for sharing and reuse come into full view once we consider the impact on public data repositories. While neuroimaging repositories have been around for a long time (for an early review, see {cite}van2001functional), their utility was long hampered by the prevalence of idiosyncratic file formats and project organizations. By supporting the BIDS standard, data repositories open the door to a wide range of powerful capabilities.
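Because the hierarchy and naming rules are fixed, the location of a file can be computed instead of searched for. The sketch below illustrates this with a hypothetical helper, `bids_path`, that handles only a few entities (task, acq, run), placed after sub and ses in that order. It shows the convention only; it is not a complete or validated implementation, and the full specification defines many more entities.

```python
from pathlib import PurePosixPath

# Only a few entities are supported here, in the order in which they appear
# in BIDS filenames after sub and ses. The specification defines many more.
ENTITY_ORDER = ("task", "acq", "run")


def bids_path(sub, datatype, suffix, extension, ses=None, **entities):
    """Return a relative path of the form sub-<label>/[ses-<label>/]<datatype>/<filename>."""
    unknown = set(entities) - set(ENTITY_ORDER)
    if unknown:
        raise ValueError(f"entities not handled by this sketch: {sorted(unknown)}")

    folder = PurePosixPath(f"sub-{sub}")
    pairs = [f"sub-{sub}"]
    if ses is not None:
        # A session adds one directory level and one key-value pair.
        folder = folder / f"ses-{ses}"
        pairs.append(f"ses-{ses}")
    for key in ENTITY_ORDER:
        if key in entities:
            pairs.append(f"{key}-{entities[key]}")

    # Key-value pairs joined by underscores, then the suffix, then the extension.
    filename = "_".join(pairs + [suffix]) + extension
    return str(folder / datatype / filename)


print(bids_path("control01", "func", "bold", ".nii.gz", task="nback"))
print(bids_path("control01", "func", "bold", ".nii.gz", ses="01", task="nback", run="1"))
```

The first call reproduces the path of the BOLD file in the example directory tree shown earlier. The second call shows how a session inserts both a ses-01 folder and a ses-01 key-value pair.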

To illustrate, consider OpenNeuro, currently the largest and most widely used repository of brain MRI data. OpenNeuro requires uploaded datasets to be in BIDS format (though datasets do not have to be fully compliant). As a result, the platform can automatically extract, display, and index important metadata. This includes the number of subjects, sessions, and runs in each dataset, the data modalities and experimental tasks present, and a standardized description of the dataset. Integration with free analysis platforms like BrainLife is possible, as is structured querying over datasets via OpenNeuro’s GraphQL API endpoint.

Perhaps most importantly, the incremental effort required by users to make their BIDS-compliant datasets publicly available and immediately usable by others is minimal: in most cases, users have only to click an Upload button and locate the project they wish to share (there is also a command-line interface, for users who prefer to interact with OpenNeuro programmatically).

BIDS-Apps

A second benefit to representing neuroimaging data in BIDS is that one immediately gains access to a large and rapidly growing ecosystem of BIDS-compatible tools. Perhaps you’ve used different neuroimaging tools in your research, for example both FSL and SPM (the two most widely used fMRI data analysis suites). If so, you’ve probably had to do some work to get your data into a somewhat different format for each tool. In a world without standards, tool developers can’t reasonably be expected to know how to read your particular dataset, so the onus falls on you to get your data into a compatible format. In the worst case, this means that every time you want to use a new tool, you have to do some more work.

By contrast, life is simpler for tools that natively support BIDS. Take fMRIPrep, a very popular preprocessing pipeline for fMRI data {cite}Esteban2019-md. Once we know that it takes valid BIDS datasets as inputs, the odds are very high that we’ll be able to apply it to our own valid BIDS datasets with little or no additional work. To facilitate the development and use of these kinds of tools, BIDS developed a lightweight standard for “BIDS Apps” {cite}Gorgolewski2017-mb. A BIDS App is an application that takes one or more BIDS datasets as input. There is no restriction on what a BIDS App can do or what it’s allowed to output, though many BIDS Apps output BIDS-Derivatives datasets. The only requirements are that a BIDS App is containerized (using Docker or Singularity; see {numref}docker) and that it accepts valid BIDS datasets as input. New BIDS Apps are continuously being developed, and as of this writing, the BIDS Apps website lists a few dozen apps.
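The BIDS Apps convention also fixes the shape of the command line. It takes positional arguments for the input BIDS directory, the output directory, and the analysis level (participant or group), plus an optional --participant_label to select subjects. The minimal argparse skeleton below sketches such an interface. Apart from those argument names, everything in it (the function name `build_parser`, the help texts) is our own illustration. A real BIDS App would also need to be packaged in a container.

```python
import argparse


def build_parser():
    """Hypothetical skeleton of a BIDS-App-style command-line interface."""
    parser = argparse.ArgumentParser(description="Example BIDS App (illustration only)")
    parser.add_argument("bids_dir", help="root directory of a valid BIDS dataset")
    parser.add_argument("output_dir", help="directory where outputs are written")
    parser.add_argument(
        "analysis_level",
        choices=["participant", "group"],
        help="process each participant separately, or combine across participants",
    )
    parser.add_argument(
        "--participant_label",
        nargs="+",
        help="subject labels to process, without the 'sub-' prefix",
    )
    return parser


args = build_parser().parse_args(
    ["data/bids_root/", "out/", "participant", "--participant_label", "01", "02"]
)
print(args.analysis_level, args.participant_label)
```

The fmriprep invocation shown in this section follows the same positional pattern of input directory, output directory, and analysis level.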

What’s particularly nice about this approach is that it doesn’t necessarily require the developers of existing tools to do a lot of extra work themselves to support BIDS. In principle, anyone can write a BIDS-App “wrapper” that translates between the BIDS format and whatever format a tool natively expects. For example, the BIDS-Apps registry already contains BIDS-Apps for packages or pipelines like SPM, CPAC, FreeSurfer, and the Human Connectome Project Pipelines. Some of these apps are still fairly rudimentary and don’t cover all of the functionality of the original tools, but others support much or most of it. And of course, many BIDS-Apps aren’t wrappers around other tools at all: they’re entirely new tools designed from the start to support only BIDS datasets. We’ve already mentioned fMRIPrep, which has very quickly become arguably the de facto preprocessing pipeline in fMRI. Another widely used BIDS-App is MRIQC {cite}Esteban2017-tu, a tool for automated quality control and quality assessment of structural and functional MRI scans, which we will see in action in {numref}nibabel. Although the BIDS-Apps ecosystem is still in its infancy, these two tools already come close to being killer applications for many researchers.

To demonstrate this, consider how easy it is to run fMRIPrep once your data is organized in the BIDS format. After installing the software and its dependencies, running it is as simple as issuing this command in the terminal:

```shell
fmriprep data/bids_root/ out/ participant -w work/
```

The arguments are as follows:

- data/bids_root points to a directory containing a BIDS-organized dataset that includes fMRI data.
- out points to the directory where the outputs (the BIDS derivatives) will be saved.
- participant is the analysis level, meaning that each subject is processed separately.
- work is a directory for some of the intermediate products generated along the way.

It might not be immediately apparent why BIDS is what makes this so simple. Consider, though, what the software would have to do to find all of the fMRI data inside a complex raw MRI dataset that might also contain other data types and files. fMRI data can also be collected with many different acquisition protocols. In addition, fMRI processing sometimes uses other information, such as field map measurements or anatomical T1-weighted or T2-weighted scans. Because the data comply with BIDS, fMRIPrep can locate everything it needs within the dataset. It can then use all of that information to preprocess the data as well as the available inputs allow.
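To make the point concrete, here is a rough sketch of the search that a BIDS-aware tool can perform. It relies only on the layout rules described earlier: sub-<label>/[ses-<label>/]func/*_bold.nii.gz. The function `find_bold_runs` is hypothetical and is not how fMRIPrep is implemented; it also skips validation and metadata entirely.

```python
import tempfile
from pathlib import Path


def find_bold_runs(bids_root):
    """Map each subject label to the BOLD runs found for it (hypothetical helper)."""
    root = Path(bids_root)
    runs = {}
    # Without sessions: sub-<label>/func/...; with sessions: sub-<label>/ses-<label>/func/...
    for pattern in ("sub-*/func/*_bold.nii.gz", "sub-*/ses-*/func/*_bold.nii.gz"):
        for path in sorted(root.glob(pattern)):
            rel = path.relative_to(root)
            subject = rel.parts[0][len("sub-"):]
            runs.setdefault(subject, []).append(rel.as_posix())
    return runs


# Build a tiny throwaway dataset of empty files to try the function on.
with tempfile.TemporaryDirectory() as tmp:
    for rel in (
        "sub-01/anat/sub-01_T1w.nii.gz",
        "sub-01/func/sub-01_task-nback_bold.nii.gz",
        "sub-01/func/sub-01_task-nback_events.tsv",
        "sub-02/ses-01/func/sub-02_ses-01_task-nback_bold.nii.gz",
    ):
        f = Path(tmp, rel)
        f.parent.mkdir(parents=True, exist_ok=True)
        f.touch()
    result = find_bold_runs(tmp)

print(result)
```

The anatomical image and the events file are skipped automatically, because the directory names and the bold suffix alone identify the functional runs.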

Utility libraries

Lastly, the widespread adoption of BIDS has also spawned a large number of utility libraries designed to help developers (rather than end users) build their analysis pipelines and tools more efficiently. Suppose I’m writing a script to automate my lab’s typical fMRI analysis workflow. It’s a safe bet that, at multiple points in my script, I’ll need to interact with the input datasets in fairly stereotyped and repetitive ways. For instance, I might need to search through the project directory for all files containing information about event timing, but only for a particular experimental task. Or, I might need to extract some metadata containing key parameters for each time-series image I want to analyze (e.g., the repetition time, or TR). Such tasks are usually not very complicated, but they’re tedious and can slow down development considerably. Worse, at a community level, they introduce massive inefficiency, because each person working on their analysis script ends up writing their own code to solve what are usually very similar problems.
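The sketch below illustrates this kind of chore using only the Python standard library. It finds the events files for one task and reads the repetition time (the RepetitionTime field) from the matching BOLD JSON sidecar. The function name `events_and_tr` is hypothetical, and this is not PyBIDS's API. The code also assumes each sidecar sits next to its events file. It ignores the BIDS inheritance principle, under which metadata may also live in JSON files higher up in the tree.

```python
import json
import tempfile
from pathlib import Path


def events_and_tr(bids_root, task):
    """List (events file, RepetitionTime) pairs for one task (hypothetical helper).

    Assumes each *_events.tsv has a *_bold.json sidecar next to it; metadata
    inherited from higher-level JSON files is not handled.
    """
    root = Path(bids_root)
    found = []
    for events in sorted(root.rglob(f"*_task-{task}_*events.tsv")):
        sidecar = events.with_name(events.name.replace("_events.tsv", "_bold.json"))
        tr = None
        if sidecar.exists():
            tr = json.loads(sidecar.read_text())["RepetitionTime"]
        found.append((events.relative_to(root).as_posix(), tr))
    return found


# Throwaway example: one nback events file, plus sidecars for two tasks.
with tempfile.TemporaryDirectory() as tmp:
    func = Path(tmp, "sub-01", "func")
    func.mkdir(parents=True)
    (func / "sub-01_task-nback_events.tsv").write_text("onset\tduration\n")
    (func / "sub-01_task-nback_bold.json").write_text(json.dumps({"RepetitionTime": 2.0}))
    (func / "sub-01_task-rest_bold.json").write_text(json.dumps({"RepetitionTime": 1.5}))
    result = events_and_tr(tmp, "nback")

print(result)
```

Even this small helper already encodes several assumptions about the layout, which is exactly the repeated effort a shared library can absorb.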

A good utility library abstracts away a lot of this kind of low-level work and allows researchers and analysts to focus most of their attention on high-level objectives. By standardizing the representation of neuroimaging data, BIDS makes it much easier to write good libraries of this sort. Probably the best example so far is a package called PyBIDS, which provides a convenient Python interface for basic querying and manipulation of BIDS datasets. To give you a sense of how much work PyBIDS can save you when you’re writing neuroimaging analysis code in Python, let’s take a look at some of the things the package can do.

We start by importing an object called BIDSLayout, which we will use to manage and query the layout of files on disk. We also import a function that knows how to locate some test data that was installed on our computer together with the PyBIDS software library.

from bids import BIDSLayout

from bids.tests import get_test_data_path

One of the datasets within the test data path is dataset ds005 from OpenNeuro. Note that the software has not actually installed a large amount of neuroimaging data onto our hard drive; that would take up far too much space! Instead, it installed files that have the right names and are organized in the right way, but are mostly empty. This lets us demonstrate how the software works, but don't try to read neuroimaging data from any of the files in that directory. We'll work with a more manageable BIDS dataset, and read the data in its files, in {numref}nibabel.

For now, we initialize a BIDSLayout object by pointing it to the location of the dataset on our disk. When we do that, the software scans through that part of our file system, validates that it is a properly organized BIDS dataset, and finds all of the files that are arranged according to the specification. This already allows the object to infer some things about the dataset. For example, the dataset has 16 subjects and 48 total runs (here a “run” is an individual fMRI scan). The person who organized this dataset decided not to include a session folder for each subject, presumably because each subject participated in just one session in this experiment, so that information would not be useful.

layout = BIDSLayout(get_test_data_path() + "/ds005")

print(layout)

BIDS Layout: ...packages/bids/tests/data/ds005 | Subjects: 16 | Sessions: 0 | Runs: 48
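To see why a consistent layout makes this kind of bookkeeping easy, the following toy sketch counts subjects and runs from a list of file paths alone. It is not how PyBIDS is implemented: it simply treats each BOLD image as one run. The path list is synthetic, built to mirror the counts reported above for ds005 (16 subjects, each with three runs of the mixedgamblestask task), and the function name summarize_layout is hypothetical.

```python
import re


def summarize_layout(paths):
    """Return (number of subjects, number of runs) for a list of BIDS paths.

    Toy bookkeeping only: every *_bold.nii.gz file is counted as one run.
    """
    subjects = set()
    runs = 0
    for path in paths:
        # The subject label is whatever follows "sub-" in the path.
        match = re.search(r"sub-([A-Za-z0-9]+)", path)
        if match:
            subjects.add(match.group(1))
        if path.endswith("_bold.nii.gz"):
            runs += 1
    return len(subjects), runs


# Synthetic paths mirroring ds005's structure: 16 subjects x 3 runs.
example = []
for s in range(1, 17):
    sub = f"sub-{s:02d}"
    example.append(f"{sub}/anat/{sub}_T1w.nii.gz")
    for r in range(1, 4):
        stem = f"{sub}/func/{sub}_task-mixedgamblestask_run-{r:02d}"
        example += [stem + "_bold.nii.gz", stem + "_events.tsv"]

print(summarize_layout(example))  # (16, 48)
```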

The layout object now has a method called get(), which we can use to access various parts of the dataset. For example, we can ask it for a list of the filenames of all of the anatomical ("T1w") scans that were collected for subjects sub-01 and sub-02:

layout.get(subject=['01', '02'], suffix="T1w", return_type='filename')

['/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-01/anat/sub-01_T1w.nii.gz',

'/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-02/anat/sub-02_T1w.nii.gz']

Or, using slightly different criteria, we can ask for all of the functional ("bold") scans collected for subject sub-03:

layout.get(subject='03', suffix="bold", return_type='filename')

['/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-03/func/sub-03_task-mixedgamblestask_run-01_bold.nii.gz',

'/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-03/func/sub-03_task-mixedgamblestask_run-02_bold.nii.gz',

'/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-03/func/sub-03_task-mixedgamblestask_run-03_bold.nii.gz']

In these examples, we asked the BIDSLayout object for the 'filename' return type. If we don't explicitly ask for a return type, we get back a list of BIDSImageFile objects instead. For example, here we select the first of these for sub-03's fMRI scans:

bids_files = layout.get(subject="03", suffix="bold")

bids_image = bids_files[0]

This object is quite useful, of course. For example, it knows how to parse the file name into meaningful entities, using the get_entities() method, which returns a dictionary with entities such as subject and task that can be used to keep track of things in analysis scripts.

bids_image.get_entities()

{'datatype': 'func',

'extension': '.nii.gz',

'run': 1,

'subject': '03',

'suffix': 'bold',

'task': 'mixedgamblestask'}
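Because BIDS filenames follow fixed rules, the core of what get_entities() returns can be recovered with a few lines of string handling. The sketch below is a hypothetical re-implementation for illustration only, not PyBIDS's own code. It handles just the sub, ses, task, and run keys, takes the datatype from the name of the parent folder (which assumes the file sits inside a datatype folder such as func or anat), and treats everything after the first dot as the extension.

```python
from pathlib import PurePosixPath

# Short filename keys -> entity names as reported by get_entities().
# Only the keys needed for this example are listed (an assumption).
ENTITY_NAMES = {"sub": "subject", "ses": "session", "task": "task", "run": "run"}


def parse_entities(path):
    """Split a BIDS filename into a dictionary of entities (toy version)."""
    p = PurePosixPath(path)
    # Everything after the first dot is the extension, e.g. ".nii.gz".
    stem, dot, ext = p.name.partition(".")
    *pairs, suffix = stem.split("_")
    entities = {"datatype": p.parent.name, "extension": dot + ext, "suffix": suffix}
    for pair in pairs:
        key, _, value = pair.partition("-")
        key = ENTITY_NAMES.get(key, key)
        # Run indices are integers in BIDS; other labels stay as strings.
        entities[key] = int(value) if key == "run" else value
    return entities


path = "ds005/sub-03/func/sub-03_task-mixedgamblestask_run-01_bold.nii.gz"
print(dict(sorted(parse_entities(path).items())))
# {'datatype': 'func', 'extension': '.nii.gz', 'run': 1, 'subject': '03', 'suffix': 'bold', 'task': 'mixedgamblestask'}
```

PyBIDS itself recognizes many more entities than this sketch does, so in real analysis code the library method is the one to rely on.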

In most cases, you can also get direct access to the imaging data through the BIDSImageFile object. This object has a get_image method, which would usually return a nibabel Nifti1Image object. As you will see in {numref}nibabel, this object lets you extract metadata, or even read the data from a file into memory as a Numpy array. In this case, however, calling the get_image method would raise an error because, as we mentioned above, the files do not contain any data. So let's look at another kind of file that we can read directly here. In addition to the neuroimaging data, BIDS provides instructions on how to organize files that record the behavioral events that occurred during an experiment. These are stored as tab-separated values ('.tsv') files, one for each run in the experiment. For example, for this dataset, we can query for the events that happened during subject sub-03's third run:

events = layout.get(subject='03', extension=".tsv", task="mixedgamblestask", run="03")

tsv_file = events[0]

print(tsv_file)

<BIDSDataFile filename='/home/runner/.local/lib/python3.10/site-packages/bids/tests/data/ds005/sub-03/func/sub-03_task-mixedgamblestask_run-03_events.tsv'>

Instead of a BIDSImageFile, the variable tsv_file is now a BIDSDataFile object. This kind of object has a get_df method, which returns a Pandas DataFrame object:

bids_df = tsv_file.get_df()

bids_df.info()

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 85 entries, 0 to 84
Data columns (total 12 columns):
 #   Column                      Non-Null Count  Dtype
---  ------                      --------------  -----
 0   onset                       85 non-null     float64
 1   duration                    85 non-null     int64
 2   trial_type                  85 non-null     object
 3   distance from indifference  0 non-null      float64
 4   parametric gain             85 non-null     float64
 5   parametric loss             0 non-null      float64
 6   gain                        85 non-null     int64
 7   loss                        85 non-null     int64
 8   PTval                       85 non-null     float64
 9   respnum                     85 non-null     int64
 10  respcat                     85 non-null     int64
 11  RT                          85 non-null     float64
dtypes: float64(6), int64(5), object(1)
memory usage: 8.1+ KB
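Under the hood, an events file is plain tab-separated text, so it can also be read without pandas. The following standard-library sketch shows the one BIDS-specific detail a reader has to handle: missing values are written as n/a. The read_events function and the two-row sample are hypothetical (the sample is made up and is not the content of the ds005 file); when pandas is available, get_df remains the convenient route.

```python
import csv
import io


def read_events(tsv_text):
    """Parse the text of a BIDS events file into a list of dictionaries."""
    rows = []
    for row in csv.DictReader(io.StringIO(tsv_text), delimiter="\t"):
        parsed = {}
        for column, value in row.items():
            if value == "n/a":
                parsed[column] = None          # BIDS writes missing values as n/a
            elif column in ("onset", "duration"):
                parsed[column] = float(value)  # times in seconds
            else:
                parsed[column] = value         # leave other columns as text
        rows.append(parsed)
    return rows


# A made-up two-row sample, for illustration only.
sample = (
    "onset\tduration\ttrial_type\tgain\n"
    "0.0\t3\texample\t20\n"
    "4.0\t3\texample\tn/a\n"
)
parsed_rows = read_events(sample)
print(len(parsed_rows), parsed_rows[1]["gain"])  # 2 None
```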

This kind of functionality is useful if you are planning to automate your analysis over large datasets that can include heterogeneous acquisitions between subjects and within subjects. At the very least, we hope that the examples have conveyed to you the power inherent in organizing your data according to a standard, as a starting point to use, and maybe also develop, analysis pipelines that expect data in this format. We will see more examples of this in practice in the next section.

Exercises

BIDS has a set of example datasets available in a GitHub repository at https://github.com/bids-standard/bids-examples. Clone the repository and use PyBIDS to explore the dataset called “ds011”. Using only PyBIDS code, can you figure out how many subjects participated in this study? What values of TR were used in the fMRI acquisitions in this dataset?

Additional resources

There are many places to learn more about the NiPy community. The documentation of each of the software libraries that were mentioned here includes fully worked-out examples of data analysis, in addition to links to tutorials, videos, and so forth. For Nipype, in particular, we recommend Michael Notter’s Nipype tutorial as a good starting point.

BIDS is not only a data standard but also a community of developers and users that support the use and further development of the standard. For example, over the time since the standard was first proposed, the community has added instructions to support the organization and sharing of new data modalities (e.g., intracranial EEG) and derivatives of processing. The strength of such a community is that, like open-source software, it draws on the individual strengths of many individuals and many groups who are willing to spend time and effort evolving and improving it. One of the resources that the community has developed to help people who are new to the standard learn more about it is the BIDS Starter Kit. It is a website that includes materials (videos, tutorials, explanations, and examples) to help you get started learning about and eventually using the BIDS standard.

In addition to these relatively static resources, users of both NiPy software and BIDS can interact with other members of the community through a dedicated questions and answers website called Neurostars. On this website, anyone can ask questions about neuroscience software and get answers from experts who frequent the website. In particular, the developers of many of the projects that we mentioned in this chapter, and many of the people who work on the BIDS standard often answer questions about these projects through this website.

Reference

Rokem A, Yarkoni T. Data Science for Neuroimaging: An Introduction. 2023.

Another set of tools that give us more control over our computational environment are tools that track changes in our software and analysis. If this seems like a boring or trivial task, consider the changes that you need to make, over the course of a long project, to a program that you wrote to analyze your data. Also consider what happens when you work with others on the same project, and several different people can introduce changes to the code that you are using. If you have ever tried to do either of these things, you may have already invented your own way to track changes to your programs: for example, naming files with the date of the most recent change, or adding the name of the person who made the most recent change to the filename. This is a form of version control, but it is often idiosyncratic and error-prone. Software tools for version control instead let us explicitly record each set of changes to our code as we go along, keeping track of who made which changes and when. They also let us merge changes made by other people. When needed, they mark where our changes conflict with changes that others have made, and let us resolve these conflicts before moving on.

Getting started with git

One of the most widely used version control tools is called “git”. Without going too much into the differences between git and the alternatives, we'll just mention that one reason git is so widely used is the availability of services that let you use your version control to share your code with others through the web and to collaborate with them on the same code base fairly seamlessly. Two of the most popular services are GitHub and GitLab. After we introduce git and go over some of the basic mechanics of using it, we will demonstrate how you can use one of these services to collaborate with others and to share your code openly with anyone. This is an increasingly common practice, and one that we will talk about more in sharing.

Git is a command-line application. Like the ls, cd, and pwd unix commands that you saw in unix, we use it in a shell application.

In contrast to the other shell commands you saw before, git can do many different things. To do that, git has sub-commands. For example, before you start using git, you need to use the git config sub-command to tell git who you are. We need to give git both our name and our email address.

$ git config --global user.name "Ariel Rokem"

$ git config --global user.email arokem@gmail.com

This configuration step only needs to be done once on every computer on which you use git, and is stored in your home directory (in a file called ~/.gitconfig).

Exercise

Download and install git and configure it with your name and email address.

The next sub-command is the one you will start every new project with from now on: the sub-command to initialize a repository. A repository is a folder within your file system that is tracked as one unit. One way to think about this is that your different projects can be organized into different folders, and each one of them should probably be tracked separately. So one project folder becomes one git repository (often referred to as a “repo” for short). Before using git, then, we first make a directory in which our project code will go.

$ mkdir my_project

$ cd my_project

The unix mkdir command creates a new directory called (in this case) my_project and the cd command changes our working directory so that we are now working within the new directory that we have created. And here comes the first git sub-command, to initialize a repository:

$ git init

Initialized empty Git repository in /Users/arokem/projects/my_project/.git/

As you can see, when this sub-command is issued, git reports back that the empty repository has been created. We can start adding files to the repository by creating new files and telling git that these are files that we would like to track.

$ touch my_file.txt

$ git add my_file.txt

We’ve already seen the touch bash command before — it creates a new empty file. Here, we’ve created a text file, but this can also be (more typically) a file that contains a program written in some programming language. The git add command tells git that this is a file that we would like to track — we would like git to record any changes that we make in this file. A useful command that we will issue early and often is the git status command. This command reports to you about the current state of the repository that you are working in, without making any changes to its content or state.

$ git status

On branch master

No commits yet

Changes to be committed:

(use "git rm --cached <file>..." to unstage)

new file: my_file.txt

Here git is telling us a few things about the repository and our work within it. Let's break this message down line by line. First of all, it tells us that we are working on a branch called master. Branches are a very useful feature of git. They can be thought of as different versions of the same repository that you store side by side. The default when a repository is initialized is to have only one branch, called master. However, in recent years, git users have raised objections to the use of this word to designate the default branch, together with objections to other uses of “master”/“slave” designations in a variety of computer science and engineering contexts (e.g., in distributed computing and in databases; to read more about this, see “Additional resources” at the end of this section). Therefore, we will immediately change the name of the branch to “main”.

$ git branch -M main

In general, it is good practice to do all of your new development work not on the default branch (now called main), but on a separate branch. The benefit of this approach is that, as you work on your project, you can always come back to a “clean” version of your work that is stored on your main branch. Only when you are sure that you are ready to incorporate some new development work do you merge the development branch into your main branch.

Returning to the status message: the next line tells us that we have not made any commits to the history of this repository yet. Finally, the last three lines tell us that there are changes that we could commit and make part of the history of the repository. This is the new file that we just added, and it is currently stored in something called the “staging area”. This is a part of the repository into which we put files and changes that we might commit to the history of the repository next. We can also “unstage” them so that they are not included in the history, either because we don't want to include them yet or because we don't want to include them at all. In parentheses, git also gives us a hint about how to remove the file from the staging area, that is, how to “unstage” it. For now, though, let's assume that we do want to store the addition of this file in the history of the repository and track further changes. Storing changes in the git repository is called making a commit, so the next thing we do is issue the git commit command.

$ git commit -m "Adds a new file to the repository"

[main (root-commit) 1808a80] Adds a new file to the repository

1 file changed, 0 insertions(+), 0 deletions(-)

create mode 100644 my_file.txt

As you can see, the commit command uses the -m flag. The text in quotes after this flag is the commit message. We are recording a history of changes to the files stored in our repository, and alongside each change git records who made it and when, while the commit message records what the intention of the change was. Git also provides some additional information: a new file was created, with mode 100644. The leading 100 indicates a regular file, and the last three digits, 644, are the permissions for a file that can be read by anyone but written only by its owner (for more about file modes and permissions, you can refer to the man page of the chmod unix command). There were 0 lines inserted and 0 lines deleted because the file is empty and doesn't have any lines yet.
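The six-digit mode in “create mode 100644” packs two things together: the file type and the permission bits. Python's standard stat module can decode it, as in this short sketch. For regular files, git only records whether the file is executable (100755) or not (100644), so these are the two values you will typically see in this line.

```python
import stat

# The mode git printed in "create mode 100644 my_file.txt".
mode = 0o100644

print(stat.S_ISREG(mode))       # True: the leading 100 means "regular file"
print(oct(stat.S_IMODE(mode)))  # 0o644: just the permission bits
print(stat.filemode(mode))      # -rw-r--r--: owner reads/writes, others read
```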

We can revisit the history of the repository using the git log command.

$ git log

This should drop you into a new buffer within the shell that looks something like this:

commit 1808a8053803150c8a022b844a3257ae192f413c (HEAD -> main)

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 11:29:31 2021 -0800

Adds a new file to the repository

It tells you who made the change (Ariel, in this case), how you might reach that person (yes, that's Ariel's email address; git knows it because of the configuration step we did when we first started using git), and when the change was made (almost noon on the last day of 2021, as it happens). In the body of that entry, you can see the message that we entered when we issued the git commit command. At the top of the entry, a line tells us that this commit is identified by a string of letters and numbers that starts with “1808a”. This string is called the “SHA” of the commit, after the Secure Hash Algorithm family of hash functions that git uses (git has traditionally used SHA-1). Git feeds all of the information that makes up the commit (in this case, the name of the new file and the fact that it is empty, together with the author, the date, and the commit message) through this hash function, which condenses it into the string of 40 hexadecimal characters that you see. The advantage of this approach is that the result is, for all practical purposes, a unique identifier within this repository, because this specific change can only be made once 1. This allows us to look up the specific changes that were made in a particular commit, and also to restore the repository, or parts of it, to the state it had when this commit was made. This is a particularly compelling aspect of version control: you can effectively travel back in time to the day and time that you made a particular change in your project and see the code as it was then. This also relates to one last detail in this log message: next to the SHA identifier, in parentheses, is the message “(HEAD -> main)”. The word “HEAD” always refers to the state of the repository in the commit that you are currently viewing. Currently, HEAD, the current state of the repository, is aligned with the state of the main branch, which is why it points to main. As we continue to work, we'll be moving HEAD around to different states of the repository, and we'll see the mechanics of that in a little bit. But first, let's leave this log buffer by pressing the q key.
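To make the hashing idea concrete, the sketch below computes the identifier git assigns to a file's contents (a “blob” object): git hashes a short header, made of the word blob, the size in bytes, and a NUL byte, followed by the raw bytes, using SHA-1. Commit identifiers like 1808a80 are computed the same way, but over a commit object that also contains the author, the date, the message, and pointers to the snapshot and the parent commit, which is why committing the same file contents twice will generally produce different commit SHAs. This sketch assumes a repository using git's default SHA-1 object format, and the function name git_blob_sha is hypothetical.

```python
import hashlib


def git_blob_sha(content: bytes) -> str:
    """Compute the id git gives to a file's contents (a blob object).

    Git hashes the header b"blob <size>\\0" followed by the raw bytes.
    """
    header = b"blob " + str(len(content)).encode() + b"\0"
    return hashlib.sha1(header + content).hexdigest()


print(git_blob_sha(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
```

Running git hash-object my_file.txt while the file was still empty, right after the first commit, should print this same value.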

Let’s check what git thinks of all this.

$ git status

On branch main

nothing to commit, working tree clean

We’re still on the main branch. But now git tells us that there is nothing to commit and that our working tree is clean. This is a way of saying that nothing has changed since the last time we made a commit. When using git for your own work, this is probably the state in which you would like to leave it at the end of a working session. To summarize so far: we haven’t done much yet, but we’ve initialized a repository, and added a file to it. Along the way, you’ve seen how to check the status of the repository, how to commit changes to the history of the repository, and how to examine the log that records the history of the repository. As you will see next, at the most basic level of operating with git, we go through cycles of changes and additions to the files in the repository and commits that record these changes, both operations that you have already seen. Overall, we will discuss three different levels of intricacy. The first level, which we will start discussing next, is about tracking changes that you make to a repository and using the history of the repository to recover different states of your project. At the second level, we will dive a little bit more into the use of branches to make changes and incorporate them into your project’s main branch. The third level is about collaborating with others on a project.

Working with git at the first level: tracking changes that you make

Continuing the project we started above, we might make some edits to the file that we added. The file that we created, my_file.txt, is a text file, and we can open it for editing in many different applications (we discuss text editors, which are used to edit code, in {numref}python-env). We can also use the unix echo command to add some text to the file from the command line.

$ echo "a first line of text" >> my_file.txt

Here, the >> operator is used to redirect the output of the echo command into our text file (you can check what echo does by reading its man page). Note that there are two > symbols in that command, which indicates that we would like to append the string of characters to the end of the file. If we used only one, we would overwrite the contents of the file entirely. For now, that's not too important because there wasn't anything in the file to begin with, but it will be important in what follows.
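Python's built-in open() makes the same distinction as the shell operators: mode "a" appends to the end of a file, like >>, while mode "w" truncates it first, like >. The following sketch uses a throwaway temporary directory so that it does not touch any real my_file.txt; the trailing newlines mirror the newline that echo adds by default.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "my_file.txt"
    path.touch()  # like `touch my_file.txt`

    # Mode "a" appends, like `echo "..." >> my_file.txt`.
    with open(path, "a") as f:
        f.write("a first line of text\n")
    with open(path, "a") as f:
        f.write("another line of text\n")
    appended = path.read_text()

    # Mode "w" truncates first, like `echo "..." > my_file.txt`.
    with open(path, "w") as f:
        f.write("a first line of text\n")
    overwritten = path.read_text()

print(repr(appended))     # both lines survive
print(repr(overwritten))  # only the last write survives
```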

We’ve made some changes to the file. Let’s see what git says.

$ git status

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: my_file.txt

no changes added to commit (use "git add" and/or "git commit -a")

Let’s break that down again. We’re still on the main branch. But we now have some changes in the file, which are indicated by the fact that there are changes not staged for a commit in our text file. Git provides two hints, in parentheses, as to different things we might do next, either to move these changes into the staging area or to discard these changes and return to the state of affairs as it was when we made our previous commit.

Let’s assume that we’d like to retain these changes. That means that we’d like to first move these changes into the staging area. This is done using the git add sub-command.

$ git add my_file.txt

Let’s see how this changes the message that git status produces.

$ git status

On branch main

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

modified: my_file.txt

The file in question has moved from “changes not staged for commit” to “changes to be committed”. That is, it has been moved into the staging area. As suggested in the parentheses, it can be unstaged, or we can continue as we did before and commit these changes.

$ git commit -m "Adds text to the file"

[main 42bab79] Adds text to the file

1 file changed, 1 insertion(+)

Again, git provides some information: the branch into which this commit was added (main) and the fact that one file changed, with one line of insertion. We can look at the log again to see what has been recorded:

$ git log

Which should look something like the following, with the most recent commit at the top.

commit 42bab7959c3d3c0bce9f753abf76e097bab0d4a8 (HEAD -> main)

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 20:00:05 2021 -0800

Adds text to the file

commit 1808a8053803150c8a022b844a3257ae192f413c

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 11:29:31 2021 -0800

Adds a new file to the repository

Again, we can move out of this buffer by pressing the q key. We can also check the status of things again, confirming that there are no more changes to be recorded.

$ git status

On branch main

nothing to commit, working tree clean
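The two lists that git status reports come from comparing three versions of each file: the last commit, the staging area (also called the index), and the working directory on disk. The toy model below, with a hypothetical status function and dictionaries standing in for each of the three, replays the add-and-commit cycle we just went through. It ignores untracked files and everything else a real repository stores, so treat it as a mental model rather than a description of git's internals.

```python
def status(head, index, worktree):
    """Return what a toy `git status` would report.

    head     -- filename -> contents in the last commit
    index    -- filename -> contents in the staging area
    worktree -- filename -> contents on disk
    Untracked files (on disk but never added) are ignored in this sketch.
    """
    # Staged changes: the index differs from the last commit.
    staged = sorted(f for f in set(head) | set(index) if head.get(f) != index.get(f))
    # Unstaged changes: the files on disk differ from the index.
    unstaged = sorted(f for f in index if worktree.get(f) != index.get(f))
    return {"to be committed": staged, "not staged": unstaged}


head = {"my_file.txt": ""}                            # after the first commit
index = dict(head)
worktree = {"my_file.txt": "a first line of text\n"}  # after echo ... >> my_file.txt
print(status(head, index, worktree))
# {'to be committed': [], 'not staged': ['my_file.txt']}

index["my_file.txt"] = worktree["my_file.txt"]        # git add my_file.txt
print(status(head, index, worktree))
# {'to be committed': ['my_file.txt'], 'not staged': []}

head = dict(index)                                    # git commit
print(status(head, index, worktree))
# {'to be committed': [], 'not staged': []}
```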

This is the basic cycle of using git: make changes to the files, use git add to add them to the staging area, and then use git commit to add the staged changes to the history of the project, with a record made in the log. Let's do this one more time, and see a couple more things that you can do along the way as you make changes. For example, let's add another line of text to that file.

$ echo "another line of text" >> my_file.txt

We can continue in the cycle, with a git add and then git commit right here, but let’s pause to introduce one more thing that git can do, which is to tell you exactly what has changed in your repository since your last commit. This is done using the git diff command. If you issue that command:

$ git diff

you should be again dropped into a different buffer that looks something like this:

diff --git a/my_file.txt b/my_file.txt

index 2de149c..a344dc0 100644

--- a/my_file.txt

+++ b/my_file.txt

@@ -1 +1,2 @@

 a first line of text

+another line of text

This buffer contains information about the changes in the repository. The first few lines tell you which file changed and give some details about the nature of the change. The last two lines show the content of the change: an unchanged line of context, followed by the addition of one line of text (indicated by the “+” sign). If you had already added other files to the repository and made changes across several of them (this is pretty typical as your project grows and the changes you introduce become more complex), each file would get its own header starting with “diff --git”, and within each file, every separate “chunk” of changes would be introduced by a header like the fifth line in this output, starting with the “@@” symbols. It is not necessary to run git diff if you know exactly which changes you are staging and committing, but it provides a valuable look at those changes when they are complex. In particular, examining the output of git diff can give you a hint about what to write in your commit message.

This is a good point for a brief aside about these messages, to impress upon you that it is a great idea to write commit messages that are clear and informative. As your project changes and the log of your repository grows longer, these messages will serve you (and your collaborators, if more than one person is working on the same repository; we'll get to that in a little bit) as guideposts for finding particular sets of changes that you made along the way. Think of them as messages that you send right now, with your memory of the changes fresh in your mind, to yourself six months from now, when you will no longer remember exactly what changes you made and why.
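The hunk header in the diff output is easier to read once you know its format: “-1 +1,2” means that the old version of the hunk starts at line 1 and spans one line, while the new version starts at line 1 and spans two. Python's standard difflib module can produce the same unified diff format, as this sketch shows for the two versions of my_file.txt. Note that difflib uses a different matching algorithm from git's default, so for more complicated edits the two tools may group changes differently.

```python
import difflib

old = ["a first line of text\n"]
new = ["a first line of text\n", "another line of text\n"]

# Label the two versions the way git does ("a/" before, "b/" after).
diff = list(difflib.unified_diff(old, new, fromfile="a/my_file.txt", tofile="b/my_file.txt"))
print("".join(diff), end="")
# --- a/my_file.txt
# +++ b/my_file.txt
# @@ -1 +1,2 @@
#  a first line of text
# +another line of text
```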

At any rate, committing these changes can now look as simple as:

$ git add my_file.txt

$ git commit -m "Adds a second line of text"

Which would now increase your log to three different entries:

commit f1befd701b2fc09a52005156b333eac79a826a07 (HEAD -> main)

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 20:26:24 2021 -0800

Adds a second line of text

commit 42bab7959c3d3c0bce9f753abf76e097bab0d4a8

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 20:00:05 2021 -0800

Adds text to the file

commit 1808a8053803150c8a022b844a3257ae192f413c

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 11:29:31 2021 -0800

Adds a new file to the repository

As you have already seen, this log serves as a record of the changes you made to files in your project. In addition, it can also serve as an entry point to undoing these changes or to see the state of the files as they were at a particular point in time. For example, let’s say that you would like to go back to the state that the file had before the addition of the second line of text to that file. To do so, you would use the SHA identifier of the commit that precedes that change and the git checkout command.

$ git checkout 42bab my_file.txt

Updated 1 path from f9c56a9

Now, you can open my_file.txt and see that the second line of text has been removed. Notice also that we didn't have to type the entire 40-character SHA identifier. That is because the first five characters uniquely identify this commit within this repository, and git can find the commit with just that information. To re-apply the change, you can use the same checkout command with the SHA of the later commit instead.

$ git checkout f1bef my_file.txt

Updated 1 path from e798023

One way to think of the checkout command is that as you are working on your project git is creating a big library that contains all of the different possible states of your filesystem. When you want to change your filesystem to match one of these other states, you can go to this library and check it out from there. Another way to think about this is that we are pointing HEAD to different commits and thereby changing the state of the filesystem. This capability becomes increasingly useful when we combine it with the use of branches, which we will see next.
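The “library of states” picture can be made concrete with a toy model: a dictionary that maps each commit id to a snapshot of the files, and a function that copies one file out of a chosen snapshot into the working directory. The commit ids and file contents follow the log and the edits above, and lookups accept a unique prefix such as 42bab, as git does. The data structure and the function name checkout_file are hypothetical and say nothing about how git actually stores objects on disk.

```python
# Toy "library": full commit ids from the log above -> file snapshots.
history = {
    "1808a8053803150c8a022b844a3257ae192f413c": {"my_file.txt": ""},
    "42bab7959c3d3c0bce9f753abf76e097bab0d4a8": {"my_file.txt": "a first line of text\n"},
    "f1befd701b2fc09a52005156b333eac79a826a07": {
        "my_file.txt": "a first line of text\nanother line of text\n"
    },
}


def checkout_file(history, commit_prefix, filename, worktree):
    """Copy `filename` as it was in the matching commit into `worktree`."""
    # Like git, accept any prefix that matches exactly one commit.
    matches = [c for c in history if c.startswith(commit_prefix)]
    if len(matches) != 1:
        raise ValueError(f"unknown or ambiguous commit prefix: {commit_prefix!r}")
    worktree[filename] = history[matches[0]][filename]


# Start from the latest state, go back one commit, then forward again.
worktree = dict(history["f1befd701b2fc09a52005156b333eac79a826a07"])
checkout_file(history, "42bab", "my_file.txt", worktree)
print(repr(worktree["my_file.txt"]))  # 'a first line of text\n'
checkout_file(history, "f1bef", "my_file.txt", worktree)
print(worktree["my_file.txt"].count("\n"))  # 2
```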

Working with git at the second level: branching and merging

As we mentioned before, branches are different states of your project that can exist side by side. One of the dilemmas we face when working on a data analysis project over a long period of time is that we would like to be able to rapidly experiment with and change the code, but we often also want to be able to switch over to a version of the code that “just works”. This is exactly what branches are for: they allow you to make rapid changes to your project without worrying that you will not be able to easily recover a more stable state of the project. Let's see this in action in the minimal project that we started working with. To create a new branch, we use the git branch command. To start working on this branch, we check it out from the repository (the library) using the git checkout command. Then we make a change on the new branch and commit it, just as before.

$ git branch feature_x

$ git checkout feature_x

$ echo "this is a line with feature x" >> my_file.txt

$ git add my_file.txt

$ git commit -m "Adds feature x to the file"

Examining the git log you will see that there is an additional entry in the history. For brevity, we show here just the two top (most recent) entries, but the other entries will also be in the log (remember that you can leave the log buffer by pressing the q key).

commit ab2c28e5c08ca80c9d9fa2abab5d7501147851e1 (HEAD -> feature_x)

Author: Ariel Rokem <arokem@gmail.com>

Date: Sat Jan 1 13:27:48 2022 -0800

Adds feature x to the file

commit f1befd701b2fc09a52005156b333eac79a826a07 (main)

Author: Ariel Rokem <arokem@gmail.com>

Date: Fri Dec 31 20:26:24 2021 -0800

Adds a second line of text

You can also see that HEAD — the current state of the repository — is pointing to the feature_x branch. We also include the second entry in the log, so that you can see that the main branch is still in the state that it was before. We can further verify that by checking out the main branch.

$ git checkout main

If you open the file now, you will see that the feature x line is nowhere to be seen, and if you look at the log, you will see that this entry is not in the log for this branch and that HEAD now points to main. But, and this is part of what makes branches so useful, it is very easy to switch back and forth between any two branches. Another git checkout feature_x would bring HEAD back to the feature_x branch and add that line back to the file. On each of these branches, you can continue to make and commit changes without affecting the other branch: the histories of the two branches have forked in two different directions. Eventually, if the changes that you made on the feature_x branch are useful, you will want to combine the histories of these two branches. This is done using the git merge command, which asks git to bring all of the commits from one branch into the history of another branch. The command assumes that HEAD points to the branch into which you would like to merge the changes, so if we are merging feature_x into main, we issue one more git checkout main before issuing the merge command.

```
$ git checkout main
$ git merge feature_x
Updating f1befd7..ab2c28e
Fast-forward
 my_file.txt | 1 +
 1 file changed, 1 insertion(+)
```

The message from git indicates that the main branch has been updated: it has been “fast-forwarded”. Because main had no commits that feature_x lacked, git did not need to combine anything. It simply moved main forward to the most recent commit on feature_x. The message also tells us that this update changed one file and inserted one line. A call to git log will show us three things: the most recent commit (the one introducing the “feature x” line) is now in the history of the main branch, HEAD is pointing to main, and main and feature_x both point to this same commit.

```
commit ab2c28e5c08ca80c9d9fa2abab5d7501147851e1 (HEAD -> main, feature_x)
Author: Ariel Rokem <arokem@gmail.com>
Date:   Sat Jan 1 13:27:48 2022 -0800

    Adds feature x to the file
```

This is possible because both of these branches now have the same history. If we’d like to continue working, we can remove the feature_x branch and keep going.

```
$ git branch -d feature_x
```
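The merge and the clean-up step can be replayed in a scratch repository. The sketch below assumes a placeholder identity, placeholder file contents, and a hypothetical extra branch called experiment. It checks two points. First, a fast-forward merge creates no new commit: main and feature_x end up with the same commit ID. Second, `git branch -d` deletes a branch only when its commits are already merged, so an unmerged branch is not thrown away by accident.

```shell
#!/bin/sh
# Sketch: a fast-forward merge only moves the main branch pointer, and
# `git branch -d` deletes merged branches but refuses unmerged ones.
set -eu

# Placeholder identity (hypothetical), so commits work without any git config.
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

demo=$(mktemp -d)
cd "$demo"
git init -q my_project
cd my_project
echo "a first line of text" > my_file.txt
git add my_file.txt
git commit -q -m "Adds a first line of text"
git branch -M main

# feature_x gets one commit; main gets none, so a fast-forward is possible.
git checkout -q -b feature_x
echo "feature x" >> my_file.txt
git commit -q -am "Adds feature x to the file"

git checkout -q main
git merge feature_x          # reports "Fast-forward"; no merge commit is made
git rev-parse main feature_x # prints the same commit ID twice

# feature_x is fully merged into main, so -d deletes it.
git branch -d feature_x

# A branch whose commit is not in main is protected from -d.
git checkout -q -b experiment
echo "an unfinished idea" >> my_file.txt
git commit -q -am "Starts an experiment"
git checkout -q main
if git branch -d experiment; then
  echo "unexpected: the unmerged branch was deleted"
else
  echo "git refused to delete the unmerged branch (git branch -D would force it)"
fi
```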

Using branches in this way allows you to rapidly make changes to your projects, while always being sure that you can switch the state of the repository back to a known working state. We recommend that you keep your main branch in this known working state and only merge changes from other branches when these changes have matured sufficiently. One common way to decide whether a branch is ready to be merged into main is to ask a collaborator to look over the changes and review them in detail. This brings us to the most elaborate level of using git: collaborating with others.

Working with git at the third level: collaborating with others

So far, we’ve seen how git can be used to track your work on a project. This is certainly useful when you work on your own, but git really starts to shine when you use it to track the work of more than one person on the same project. To collaborate with others on a git-tracked project, you will need to put the git repository somewhere that different computers can access. This copy is called a “remote”, because it is a copy of the repository that lives on another computer, usually located somewhere remote from yours. Git supports many different ways of setting up remotes, but we will only show one here, using the GitHub website as the remote.

To get started working with GitHub, you will need to set up a (free) account. If you are a student or instructor at an educational institution, we recommend that you look into the various educational benefits that are available to you through GitHub Education.

Once you have set up your user account on GitHub, you should be able to create a new repository by clicking on the “+” sign at the top right of the web page and selecting “new repository”.

On the following page ({numref}`Figure 2`), you will be asked to name your repository. GitHub is a publicly available website, but you can choose whether you would like to make your code publicly viewable (by anyone!) or only viewable by yourself and by collaborators that you will designate. You will also be given a few other options, such as an option to add a README file and to add a license. We’ll come back to these options and their implications when we talk about sharing data analysis code in {numref}`sharing

The newly created web page will be the landing page for your repository on the internet. Its URL will look something like https://github.com/<user name>/<project name>. For example, since we created this repository under Ariel’s GitHub account, it has the URL https://github.com/arokem/my_project (feel free to visit it; it has been designated as publicly viewable). But there is nothing there until we tell git to transfer the files from the local copy of the repository on our machine to a copy on the remote, the GitHub web page. When a GitHub repository is empty, its front page contains instructions for adding code to it, either by creating a new repository on the command line or by pushing an existing one. We will do the latter here, because we already have a repository that we have started working on. The commands for this case are entered at the command line, with the shell's working directory set to the directory that stores our repository.

```
$ git remote add origin https://github.com/arokem/my_project.git
$ git branch -M main
$ git push -u origin main
```

The first of these lines uses the git remote sub-command, which manages remotes. Its sub-sub-command git remote add adds a new remote called origin. We could name it anything we want (for example git remote add github or git remote add arokem), but by convention origin (or sometimes upstream) is the name given to the remote that serves as the central node for collaboration. The command also tells git that this remote exists at the given URL (notice the addition of .git at the end of the URL). Admittedly, it’s a long command with many parts.

The second line is something that we already did when we first initialized this repository: renaming the default branch to main. So we can skip it now.

The third line uses the git push sub-command to copy the repository (the files stored in it and its entire history) to the remote. We are telling git that we would like to copy the main branch from our computer to the remote that we have named origin. The -u flag (short for --set-upstream) tells git to record the main branch on origin as the upstream branch of our local main branch. From now on, whenever we’d like to push again from main to origin, we will only have to type git push, rather than the full version of this incantation (git push origin main).
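You don't need a GitHub account to see what these three commands do. The sketch below assumes a local bare repository (created with `git init --bare`, so it holds history but no working files) as a stand-in for the GitHub copy, and passes its file path to git remote add where the text uses the GitHub URL. The identity and file contents are placeholders. After the push, `git remote -v` lists the new remote, and `git rev-parse` reports the upstream branch that -u recorded.

```shell
#!/bin/sh
# Sketch: the add-remote / rename / push sequence, run against a local bare
# repository that stands in for GitHub (no network or account needed).
set -eu

# Placeholder identity (hypothetical), so commits work without any git config.
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

demo=$(mktemp -d)
cd "$demo"

# Stand-in for the empty repository created on the GitHub website.
git init -q --bare my_project.git

# A local project with one commit.
git init -q my_project
cd my_project
echo "a first line of text" > my_file.txt
git add my_file.txt
git commit -q -m "Adds a first line of text"

# The same three commands, with a file path in place of the GitHub URL.
git remote add origin "$demo/my_project.git"
git branch -M main
git push -q -u origin main

git remote -v                                  # origin, for fetch and for push
git rev-parse --abbrev-ref 'main@{upstream}'   # origin/main, recorded by -u
```

Pointing the same script at GitHub would only mean replacing the path with the repository URL, at which point the authentication issues discussed next come into play.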

Importantly, although you created a password when you set up your GitHub account, GitHub does not accept that password for authentication from the command line. This makes GitHub repositories more secure, but it also means that when you are asked to authenticate to push your repository to GitHub, you will need to take a different route. There are two ways to go about this. The first is to create a personal access token (PAT); the GitHub documentation has a web page that describes this process. When the shell prompts you for a password, paste this token (typically, a long string of letters, numbers, and other characters) instead of the password you created with your account. This should look as follows:

```
$ git push -u origin main
Username for 'https://github.com': arokem
Password for 'https://arokem@github.com': <insert your token here>
Enumerating objects: 12, done.
Counting objects: 100% (12/12), done.
Delta compression using up to 8 threads
Compressing objects: 100% (5/5), done.
Writing objects: 100% (12/12), 995 bytes | 497.00 KiB/s, done.
Total 12 (delta 0), reused 0 (delta 0), pack-reused 0
To https://github.com/arokem/my_project.git
 * [new branch] main -> main
Branch 'main' set up to track remote branch 'main' from 'origin'.
```

Another option is to use SSH keys for authentication. This is also described in the GitHub documentation and involves two steps: first, creating the key and storing it on your computer, and second, letting GitHub know about this SSH key. The benefit of using an SSH key is that we do not need to generate it anew every time that we want to authenticate from this computer. Importantly, if we choose the SSH key route for authenticating, we need to change the URL that we use to refer to the remote. This is done through another sub-command of the git remote command:

```
$ git remote set-url origin git@github.com:arokem/my_project.git
```
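Changing a remote's URL only edits the repository's configuration and does not contact GitHub, so you can confirm the switch locally. This sketch uses a throwaway repository and the two URLs from the text. `git remote get-url` prints the URL that git will now use.

```shell
#!/bin/sh
# Sketch: switch origin from the HTTPS URL to the SSH URL and confirm it.
# Nothing here talks to GitHub; only .git/config changes.
set -eu

demo=$(mktemp -d)
cd "$demo"
git init -q my_project
cd my_project

git remote add origin https://github.com/arokem/my_project.git
git remote get-url origin     # the HTTPS URL

git remote set-url origin git@github.com:arokem/my_project.git
git remote get-url origin     # the SSH URL
git remote -v                 # SSH URL listed for both fetch and push
```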

Once we have done that, we can visit the web page of the repository again and see that the files in the project and the history of the project have now been copied to that website ({numref}`Figure 3`). Even if you are working on a project on your own, this serves as a remote backup, which is already quite useful to have, but we are now also ready to start collaborating. To add a collaborator, click on the “settings” tab at the top right part of the repository page, select the “manage access” menu item, and then click on the “add people” button ({numref}`Figure 4`). This will let you select other GitHub users, or enter the email address of your collaborator ({numref}`Figure 5`). As another security measure, the person you added will then receive an email from GitHub and will have to approve being designated as a collaborator on this repository by clicking on a link in that email.

Once they approve the request to join this GitHub repository as a collaborator, this other person can both `git push` to your repository, just as we demonstrated above, and `git pull` from it, which we will elaborate on below. The first step they will need to take, however, is to `git clone` the repository. This would look something like this:

```
$ git clone https://github.com/arokem/my_project.git
```

When this is executed inside a particular working directory (for example, ~/projects/), it creates a sub-directory called my_project (~/projects/my_project) that contains all of the files that were pushed to GitHub. Note that git will refuse to clone into a directory that already contains a non-empty my_project folder, so if you are following along, issue this git clone command in another part of your file-system. In addition, the new directory is a full copy of the git repository, meaning that it also contains the entire history of the project. All of the operations that we performed on our own local copy (for example, checking out a file using a particular commit’s SHA identifier) are now possible on this new copy.
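The claims about cloning can be checked offline. In the sketch below, a local bare repository again stands in for GitHub. It also sets the bare repository's default branch to main, which we assume mirrors the GitHub repository in the text. The three-commit history, file contents and identity are placeholders. The script shows four things: the clone lands in a my_project sub-directory, a second clone into the same place is refused, the full history comes along, and the source is registered as origin.

```shell
#!/bin/sh
# Sketch: a clone carries the full history, and git names its source "origin".
# A local bare repository stands in for GitHub.
set -eu

# Placeholder identity (hypothetical), so commits work without any git config.
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

demo=$(mktemp -d)
cd "$demo"
git init -q --bare my_project.git
git -C my_project.git symbolic-ref HEAD refs/heads/main  # default branch: main

# The original author makes three commits and pushes them.
git init -q original
cd original
for n in 1 2 3; do
  echo "line $n" >> my_file.txt
  git add my_file.txt
  git commit -q -m "Adds line $n"
done
git branch -M main
git remote add origin "$demo/my_project.git"
git push -q -u origin main

# A collaborator clones into their own projects folder.
mkdir "$demo/projects"
cd "$demo/projects"
git clone -q "$demo/my_project.git"   # creates projects/my_project

# Cloning again here fails, because my_project already exists and is not empty.
if git clone -q "$demo/my_project.git" 2>/dev/null; then
  echo "unexpected: second clone succeeded"
else
  echo "second clone refused"
fi

cd my_project
git --no-pager log --oneline   # all three commits came along
git remote                     # origin
git show HEAD~2:my_file.txt    # the file as of the first commit
```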

The simplest mode of collaboration is one where a single person makes all of the changes to the files. This person is the only one who issues git push commands, which update the copy of the repository on GitHub. All the other collaborators then issue git pull origin main within their local copies to update the files on their machines. The git pull command is the opposite of the git push command that you saw before: it syncs the local copy of the repository with the copy that is stored in a particular remote (in this case, the GitHub copy, which git clone automatically designates as origin).
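Here is a minimal sketch of this single-writer workflow, assuming two hypothetical local clones named writer and reader and a local bare repository in place of GitHub. Because the reader never commits anything, each pull is a fast-forward, and the two copies end up at the same commit.

```shell
#!/bin/sh
# Sketch of the single-writer workflow: one person pushes, a collaborator
# pulls. "writer" and "reader" are hypothetical; a bare repo stands in for GitHub.
set -eu

# Placeholder identity (hypothetical), so commits work without any git config.
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

demo=$(mktemp -d)
cd "$demo"
git init -q --bare my_project.git
git -C my_project.git symbolic-ref HEAD refs/heads/main

# The writer creates the project and pushes it.
git init -q writer
cd writer
echo "a first line of text" > my_file.txt
git add my_file.txt
git commit -q -m "Adds a first line of text"
git branch -M main
git remote add origin "$demo/my_project.git"
git push -q -u origin main

# The collaborator clones once...
cd "$demo"
git clone -q "$demo/my_project.git" reader

# ...the writer keeps working and pushing...
cd "$demo/writer"
echo "a second line of text" >> my_file.txt
git commit -q -am "Adds a second line of text"
git push -q

# ...and the collaborator catches up with a pull.
cd "$demo/reader"
git pull -q origin main
cat my_file.txt     # now shows both lines
```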

A more typical mode of collaboration is one where all of the collaborators both pull changes that others made and push their own changes. This can work, but if several people are working on the same repository at the same time, you might run into some issues. The first arises when someone else pushed to the repository while you were working, so that the history of the repository on GitHub is out of sync with the history of your local copy. In that case, you might see a message such as this one:

```
$ git push origin main
To https://github.com/arokem/my_project.git
 ! [rejected] main -> main (fetch first)
error: failed to push some refs to 'https://github.com/arokem/my_project.git'
hint: Updates were rejected because the remote contains work that you do
hint: not have locally. This is usually caused by another repository pushing
hint: to the same ref. You may want to first integrate the remote changes
hint: (e.g., 'git pull ...') before pushing again.
hint: See the 'Note about fast-forwards' in 'git push --help' for details.
```
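This rejection can be reproduced without GitHub. The sketch below assumes two hypothetical collaborators, alice and bob, each with a clone of a local bare repository. Alice pushes first, so Bob's push is rejected. Bob then pulls and pushes again, as the hint suggests. The pull passes `--no-rebase` (merge the remote work in) and `--no-edit` (accept the default merge message). We add these because recent git versions may stop and ask how to reconcile diverged histories when no preference has been configured. The two changes touch different files, so the merge has no conflict.

```shell
#!/bin/sh
# Sketch: reproduce the "[rejected] ... (fetch first)" error with two clones
# of a local bare repository, then integrate and push again.
set -eu

# Placeholder identity (hypothetical), so commits work without any git config.
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="Demo User" GIT_COMMITTER_EMAIL="demo@example.com"

demo=$(mktemp -d)
cd "$demo"
git init -q --bare my_project.git
git -C my_project.git symbolic-ref HEAD refs/heads/main

# Alice (hypothetical) creates the project and pushes it.
git init -q alice
cd alice
echo "a first line of text" > my_file.txt
git add my_file.txt
git commit -q -m "Adds a first line of text"
git branch -M main
git remote add origin "$demo/my_project.git"
git push -q -u origin main

# Bob (hypothetical) clones it.
cd "$demo"
git clone -q "$demo/my_project.git" bob

# Alice pushes a new commit first...
cd "$demo/alice"
echo "notes from alice" > alice_notes.txt
git add alice_notes.txt
git commit -q -m "Adds Alice's notes"
git push -q

# ...so Bob's push, based on the older history, is rejected.
cd "$demo/bob"
echo "notes from bob" > bob_notes.txt
git add bob_notes.txt
git commit -q -m "Adds Bob's notes"
if git push origin main; then
  echo "unexpected: push succeeded"
else
  echo "push rejected, as in the message above"
fi

# Integrate Alice's commit with a merge, then push again.
git pull -q --no-rebase --no-edit origin main
git push -q origin main
```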

Often, it would be enough to issue a `git pull origin main`, followed by a `git push origin main` (as suggested in the hint), and this issue would be resolved: your collaborator’s changes would be integrated into the history of the repository together with your changes, and you would happily continue working. But in some cases, if you and your collaborator introduced changes to the same lines in the same files, git would not know how to resolve this conflict, and you would then see a message that looks like this: